This post was written by NCTE member Alexa Quinn, associated with the NCTE Standing Committee on Literacy Assessment.

In Part 1 of this series, Chris Hass illustrated the potential of student-generated questions (SGQs) to reorient discussion and foster deeper understanding while centering student voices. In Part 2, I explore ideas for how teachers might learn from the questions students generate.

A few weeks ago, I was reading with my three-year-old son before bed. Thinking of his relatively new appreciation for stuffed animals, I opened Mo Willems’s Knuffle Bunny, the 2004 “cautionary tale” (per the subtitle) about a beloved bunny left at a laundromat. A yellow sticky note fluttered to the floor.

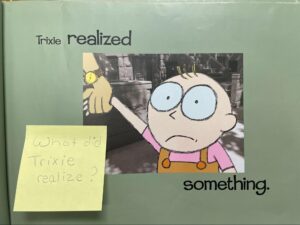

Recognizing a child’s handwriting, I recalled the day that my former fourth-grade students had been asked to share books with kindergarteners for World Read Aloud Day. The sticky notes sprinkled throughout the pages held the questions that a student had planned to ask her younger partner about the story.

“Is Trixie really helping with the laundry?”

“What does it mean to go ‘boneless’?”

“Why was her daddy unhappy?”

As I read through the questions, I was struck by how much information was contained in these handwritten probes. I wondered how SGQs might be used as assessment both of and for learning, especially when emphasizing culturally responsive practices.

What Can We Learn about Readers from the Questions They Ask?

Questions that readers generate provide powerful insight into their understanding. Recent research has revealed the strong relationship between the quality of SGQs and student understanding of a text (Maplethorpe et al., 2022). Importantly, this research also showed that many students with lower scores on traditional assessments of reading comprehension were able to craft insightful questions about the text. The authors conclude, “Using SGQ with a variety of genres in assessments may produce a more authentic and holistic picture of what diverse students know and understand while enhancing valuable student agency within the process” (Maplethorpe et al., 2022, p. 89).

Keeping track of the types of questions individual readers ask is one way to create a “picture” of knowledge and skill development to inform instruction. For example, reviewing my student’s sticky notes led me to notice she was primarily asking questions that could be answered using the words and illustrations alone (“Where did Trixie go with her daddy?” “How is she feeling now?”). This pattern gave me insight into the plot elements she was attending to successfully, as well as some ideas about what I might prompt next (e.g., theme or lesson learned).

These sticky-note questions written for younger students likely differ from the metacognitive questions that readers might ask themselves as they make sense of a text (as Chris Hass described in Part 1). When using SGQs as a form of assessment, then, the prompt matters. Who is the audience for the questions students are generating? Preparing for a read-aloud with a younger student provided an authentic purpose for generating questions. Other possibilities include writing questions for a peer, the teacher, the text’s author, or a subject expert. Explicitly asking students to generate questions for an authentic audience is one avenue for formalizing the collection of SGQs as assessment data.

How Should We Evaluate Student Questions?

Researchers have taken several different approaches to evaluating SGQs. Some have used binary rating systems, such as literal versus inferential (Davey & McBride, 1986) or factual versus conceptual (Bugg & McDaniel, 2012), while others have placed questions on hierarchies inspired by Bloom’s taxonomy (e.g., Taboada & Guthrie, 2006). Recent work aims for a more fluid approach using three mental models (text-processing, situational, and critical) that include additional gradations and can be applied to both narrative and expository texts. Rating tools such as these could facilitate evaluation of SGQs in a formal assessment context.

Regardless of the specific tool, our goal should be to learn from SGQs while honoring students, their cultural knowledge, and their lived experiences. A brief noticing statement in an observation log is a simple way to capture trends in questioning from individual students (e.g., “C’s questions focused on character motivation; included personal connection to a lost Gizmo watch”). A specific “look-for” targeting student questions could be a focus of kidwatching on a recurring basis. On a larger scale, gathering sticky notes from a whole class to sort and organize in different ways could reveal topics or skills that need additional attention.

For educators who already prompt SGQs in their practice, what might it look like to select SGQs from the constant flow of information in a classroom setting and be intentional about using student questions to better support literacy learning?

What Can We Learn about Our Own Teaching from the Questions Students Ask?

The use of SGQs is often framed as a metacognitive strategy to improve comprehension (Cohen, 1983). But by viewing student questions only as a means to an end rather than as a worthy product in their own right, I suspect we might be missing out on important information that can support our growth.

On the sticky notes tucked into Knuffle Bunny, I recognized question stems I had likely been modeling regularly as I read with my class. For example, I noticed many queries about character feelings and cause and effect, but fewer about the author’s choices or other elements of critical literacy. My students’ questions served as a mirror of my own practice—what had I been communicating to my students about the types of questions that support making meaning? SGQs provide a vital opportunity for teacher self-reflection.

References

Bugg, J. M., & McDaniel, M. A. (2012). Selective benefits of question self-generation and answering for remembering expository text. Journal of Educational Psychology, 104(4), 922–931. https://doi.org/10.1037/a0028661

Cohen, R. (1983). Self-generated questions as an aid to reading comprehension. The Reading Teacher, 36(8), 770–775. https://www.jstor.org/stable/20198324

Davey, B., & McBride, S. (1986). Effects of question-generation training on reading comprehension. Journal of Educational Psychology, 78(4), 256–262. https://doi.org/10.1037/0022-0663.78.4.256

Maplethorpe, L., Kim, H., Hunte, M. R., Vincett, M., & Jang, E. E. (2022). Student-generated questions in literacy education and assessment. Journal of Literacy Research, 54(1), 74–97. https://doi.org/10.1177/1086296X221076436

Taboada, A., & Guthrie, J. T. (2006). Contributions of student questioning and prior knowledge to construction of knowledge from reading information text. Journal of Literacy Research, 38(1), 1–35. https://doi.org/10.1207/s15548430jlr3801_1

Alexa Quinn is an assistant professor at James Madison University. Her teaching and research focus on preparing elementary teachers to plan equitable and inclusive instruction and assessment across content areas. She is a former fourth-grade teacher and elementary curriculum specialist. She can be reached at quinn4am@jmu.edu.
